\| where method == "GET" and \_time > ago(24h) |

## Get n events or rows for inspection

APL log queries also support `take` as an alias for `limit`. In Splunk, if the results are ordered, `head` returns the first n results. In APL, `limit` doesn’t guarantee any order: it returns the first n rows that it finds.

| Product | Operator | Example |
| ------- | -------- | ------- |
| Splunk | head | `Sample.Logs=330009.2 \| head 100` |
| APL | limit | `['sample-http-logs'] \| limit 100` |

## Get the first *n* events or rows ordered by a field or column

For the bottom results, use `tail` in Splunk. In APL, specify the ordering direction with `asc`.

| Product | Operator | Example |
| :------ | :------- | :------ |
| Splunk | head | `Sample.Logs="33009.2" \| sort Event.Sequence \| head 20` |
| APL | top | `['sample-http-logs'] \| top 20 by method` |

## Extend the result set with new fields or columns

Splunk has an `eval` function, but it’s not comparable to the `eval` operator in APL. Both the `eval` operator in Splunk and the `extend` operator in APL support only scalar functions and arithmetic operators.

| Product | Operator | Example |
| :------ | :------- | :------ |
| Splunk | eval | `Sample.Logs=330009.2 \| eval state= if(Data.Exception = "0", "success", "error")` |
| APL | extend | `['sample-http-logs'] \| extend Grade = iff(req_duration_ms >= 80, "A", "B")` |

## Rename

APL uses the `project` operator to rename a field. In the `project` operator, a query can take advantage of any indexes that are prebuilt for a field. Splunk has a `rename` operator that does the same.

| Product | Operator | Example |
| :------ | :------- | :------ |
| Splunk | rename | `Sample.Logs=330009.2 \| rename Date.Exception as exception` |
| APL | project | `['sample-http-logs'] \| project updated_status = status` |

## Format results and projection

Splunk uses the `table` command to select which columns to include in the results. APL has a `project` operator that does the same and [more](/apl/tabular-operators/project-operator).

| Product | Operator | Example |
| :------ | :------- | :------ |
| Splunk | table | `Event.Rule=330009.2 \| table rule, state` |
| APL | project | `['sample-http-logs'] \| project status, method` |

Splunk uses the `fields -` command to select which columns to exclude from the results. APL has a `project-away` operator that does the same.

| Product | Operator | Example |
| :------ | :------- | :------ |
| Splunk | **fields -** | `Sample.Logs=330009.2 \| fields - quota, highest_seller` |
| APL | **project-away** | `['sample-http-logs'] \| project-away method, status` |

## Aggregation

See the [list of summarize aggregation functions](/apl/aggregation-function/statistical-functions) that are available.

| Splunk operator | Splunk example | APL operator | APL example |
| :-------------- | :------------- | :----------- | :---------- |
| **stats** | `search (Rule=120502.*) \| stats count by OSEnv, Audience` | summarize | `['sample-http-logs'] \| summarize count() by content_type, status` |

## Sort

In Splunk, to sort in ascending order, you must use the `reverse` operator. APL also supports defining where to put nulls, either at the beginning or at the end.

| Product | Operator | Example |
| :------ | :------- | :------ |
| Splunk | sort | `Sample.logs=120103 \| sort Data.Hresult \| reverse` |
| APL | order by | `['sample-http-logs'] \| order by status desc` |

Whether you’re just starting your transition or you’re in the thick of it, this guide can serve as a helpful roadmap for your journey from Splunk to Axiom Processing Language. Dive into the Axiom Processing Language, start converting your Splunk queries to APL, and explore the rich capabilities of the Query tab. Embrace the learning curve, and remember, every complex query you master is another step forward in your data analytics journey.

# Axiom Processing Language (APL)

Source: https://axiom.co/docs/apl/introduction

This section explains how to use the Axiom Processing Language to get deeper insights from your data.

The Axiom Processing Language (APL) is a query language that’s perfect for getting deeper insights from your data. Whether logs, events, analytics, or similar, APL provides the flexibility to filter, manipulate, and summarize your data exactly the way you need it.

## Prerequisites

* [Create an Axiom account](https://app.axiom.co/register).

* [Create a dataset in Axiom](/reference/datasets#create-dataset) where you send your data.

## Build an APL query

APL queries consist of the following:

* **Data source:** The most common data source is one of your Axiom datasets.

* **Operators:** Operators filter, manipulate, and summarize your data. Delimit operators with the pipe character (`|`).

A typical APL query has the following structure:

```kusto
DatasetName
| Operator ...
| Operator ...
```

* `DatasetName` is the name of the dataset you want to query.

* `Operator` is an operation you apply to the data.
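To make the `DatasetName | Operator ... | Operator ...` structure concrete, the following toy Python model passes rows through a few of the operators compared in the Splunk tables (`where`, `extend`, `summarize`, `order by`, and `limit`). It’s a sketch for intuition only, not how Axiom runs queries: it works on in-memory lists of dictionaries, every helper name is hypothetical, and both the `count_` column name for `summarize count()` and the explicit null-placement switch on `order_by` are assumptions.

```python
# Toy in-memory model of a few APL tabular operators, for intuition only.
# It is NOT how Axiom executes queries; all helper names are hypothetical.
from collections import Counter
from typing import Any, Callable

Row = dict[str, Any]
Op = Callable[[list[Row]], list[Row]]


def where(pred: Callable[[Row], bool]) -> Op:
    # Keep only the rows that match the predicate.
    return lambda rows: [r for r in rows if pred(r)]


def extend(**cols: Callable[[Row], Any]) -> Op:
    # Add computed columns while keeping the existing ones.
    return lambda rows: [{**r, **{k: f(r) for k, f in cols.items()}} for r in rows]


def summarize_count_by(*keys: str) -> Op:
    # One output row per distinct key combination; "count_" is an assumed column name.
    def op(rows: list[Row]) -> list[Row]:
        counts = Counter(tuple(r.get(k) for k in keys) for r in rows)
        return [{**dict(zip(keys, key)), "count_": n} for key, n in counts.items()]
    return op


def order_by(col: str, desc: bool = True, nulls_last: bool = True) -> Op:
    # Sort on one column; rows whose value is None go to the end (or the beginning).
    def op(rows: list[Row]) -> list[Row]:
        present = sorted((r for r in rows if r.get(col) is not None),
                         key=lambda r: r[col], reverse=desc)
        missing = [r for r in rows if r.get(col) is None]
        return present + missing if nulls_last else missing + present
    return op


def limit(n: int) -> Op:
    # Keep the first n rows in arrival order; no sorting is implied.
    return lambda rows: rows[:n]


def run(dataset: list[Row], *operators: Op) -> list[Row]:
    # Mirrors `DatasetName | Operator ... | Operator ...`: each output feeds the next operator.
    rows = list(dataset)
    for op in operators:
        rows = op(rows)
    return rows


logs = [
    {"method": "GET", "status": "200", "req_duration_ms": 95},
    {"method": "GET", "status": "500", "req_duration_ms": 40},
    {"method": "POST", "status": "200", "req_duration_ms": 120},
    {"method": "GET", "status": "200", "req_duration_ms": 30},
    {"method": "GET", "status": "200", "req_duration_ms": 20},
]

# Roughly: ['sample-http-logs'] | where method == "GET"
#   | extend Grade = iff(req_duration_ms >= 80, "A", "B")
#   | summarize count() by status, Grade | order by count_ desc | limit 2
result = run(
    logs,
    where(lambda r: r["method"] == "GET"),
    extend(Grade=lambda r: "A" if r["req_duration_ms"] >= 80 else "B"),
    summarize_count_by("status", "Grade"),
    order_by("count_", desc=True),
    limit(2),
)
```

In this model, `limit` simply keeps the first n rows in the order they arrive, which is why a meaningful "first n" needs an explicit sort first (or `top`).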

You can create a token that has access to a single zone, a single account, or a mix of these, depending on your needs. For account access, the token must have these permissions:

* Logs: Edit

* Account settings: Read

For zone access, the token only needs the Logs: Edit permission.

## Steps

* Log in to Cloudflare, go to your Cloudflare dashboard, and then select the Enterprise zone (domain) you want to enable Logpush for.

* Optionally, set filters and fields. You can filter logs by field (like Client IP, User Agent, etc.) and set the type of logs you want (for example, HTTP requests, firewall events).

* In Axiom, click **Settings**, select **Apps**, and install the Cloudflare Logpush app with the token you created from the profile settings in Cloudflare.

* You see your available accounts and zones. Select the Cloudflare datasets you want to subscribe to.

* The installation uses the Cloudflare API to create Logpush jobs for each selected dataset.

* After the installation completes, you can find the installed Logpush jobs in Cloudflare.

For zone-scoped Logpush jobs:

For account-scoped Logpush jobs:

* In Axiom, you can see your Cloudflare Logpush dashboard.

Using Axiom with Cloudflare Logpush offers a powerful solution for real-time monitoring, observability, and analytics. Axiom can help you gain deep insights into your app’s performance, errors, and app bottlenecks.

### Benefits of using the Axiom Cloudflare Logpush Dashboard

* Real-time visibility into web performance: One of the most crucial features is the ability to see how your website or app is performing in real time. The dashboard can show everything from page load times to error rates, giving you immediate insights that support timely decision-making.

* Actionable insights for troubleshooting: The dashboard doesn’t just provide raw data; it provides insights. Whether it’s an error that needs an immediate fix or performance metrics that point to a problem in your app, having this information readily available makes it easier to identify problems and resolve them swiftly.

* DNS metrics: Tracking the DNS requests, DNS queries, and DNS cache hits from your app helps you spot request spikes and see the total number of queries in your system.

* Centralized logging and error tracing: With logs coming in from various parts of your app stack, centralizing them within Axiom makes it easier to correlate events across different layers of your infrastructure. This is crucial for troubleshooting complex issues that may span multiple services or components.

## Supported Cloudflare Logpush Datasets

Axiom supports all the Cloudflare account-scoped datasets.

Zone-scoped

* DNS logs

* Firewall events

* HTTP requests

* NEL reports

* Spectrum events

Account-scoped

* Access requests

* Audit logs

* CASB Findings

* Device posture results

* DNS Firewall Logs

* Gateway DNS

* Gateway HTTP

* Gateway Network

* Magic IDS Detections

* Network Analytics Logs

* Workers Trace Events

* Zero Trust Network Session Logs
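The permission rules earlier on this page combine with these dataset lists: zone-scoped datasets need a token with Logs: Edit on the zone, and account-scoped datasets need Logs: Edit plus Account settings: Read. As a hedged sketch, that lookup can be written in Python. The function and its return shape are hypothetical and aren’t part of the Axiom app or the Cloudflare API; the dataset names and permission labels are copied from this page.

```python
# Sketch: which Cloudflare token permissions the selected Logpush datasets imply.
# Dataset names and permission labels mirror this page; the helper is hypothetical.

ZONE_SCOPED = {"DNS logs", "Firewall events", "HTTP requests", "NEL reports", "Spectrum events"}

ACCOUNT_SCOPED = {
    "Access requests", "Audit logs", "CASB Findings", "Device posture results",
    "DNS Firewall Logs", "Gateway DNS", "Gateway HTTP", "Gateway Network",
    "Magic IDS Detections", "Network Analytics Logs", "Workers Trace Events",
    "Zero Trust Network Session Logs",
}


def required_permissions(datasets):
    """Return {scope: set_of_permissions} needed for the selected datasets."""
    needed = {}
    for name in datasets:
        if name in ZONE_SCOPED:
            # Zone access only needs edit permission for logs.
            needed.setdefault("zone", set()).add("Logs: Edit")
        elif name in ACCOUNT_SCOPED:
            # Account access needs log edit plus read access to account settings.
            needed.setdefault("account", set()).update({"Logs: Edit", "Account settings: Read"})
        else:
            raise ValueError(f"Unsupported Cloudflare dataset: {name!r}")
    return needed
```

For example, selecting `HTTP requests` and `Gateway DNS` yields both a zone entry and an account entry, so a single token covering both scopes needs all three permission grants.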

# Connect Axiom with Cloudflare Workers

Source: https://axiom.co/docs/apps/cloudflare-workers

This page explains how to enrich your Axiom experience with Cloudflare Workers.

The Axiom Cloudflare Workers app provides granular detail about the traffic coming in from your monitored sites. This includes edge requests, static resources, client auth, response duration, and status. Axiom gives you an all-at-once view of key Cloudflare Workers metrics and logs, out of the box, with our dynamic Cloudflare Workers dashboard.

The data obtained with the Axiom dashboard gives you better insights into the state of your Cloudflare Workers so you can easily monitor bad requests, popular URLs, cumulative execution time, successful requests, and more. The app is part of Axiom’s unified logging and observability platform, so you can easily track Cloudflare Workers edge requests alongside a comprehensive view of other resources in your Cloudflare Worker environments.

## What is a Grafana data source plugin?

Grafana is an open-source tool for time-series analytics, visualization, and alerting. It’s frequently used in DevOps and IT Operations roles to provide real-time information on system health and performance.

Data sources in Grafana are the actual databases or services where the data is stored. Grafana has a variety of data source plugins that connect Grafana to different types of databases or services. This enables Grafana to query those sources and display that data on its dashboards. The data sources can be anything from traditional SQL databases to time-series databases, or metrics and logs from Axiom.

A Grafana data source plugin extends the functionality of Grafana by allowing it to interact with a specific type of data source. These plugins enable users to extract data from a variety of different sources, not just those that come supported by default in Grafana.

## Prerequisites

* [Create an Axiom account](https://app.axiom.co/).

* [Create a dataset in Axiom](/reference/datasets) where you send your data.

* [Create an API token in Axiom](/reference/tokens) with permissions to create, read, update, and delete datasets.

## Install the Axiom Grafana data source plugin on Grafana Cloud

* In Grafana, click Administration > Plugins in the side navigation menu to view installed plugins.

* In the filter bar, search for the Axiom plugin.

* Click on the plugin logo.

* Click Install.

When the installation is complete, a confirmation message indicates that the installation was successful.

* The Axiom Grafana plugin is also installable from the [Grafana Plugins page](https://grafana.com/grafana/plugins/axiomhq-axiom-datasource/).

## Install the Axiom Grafana data source plugin on local Grafana

The Axiom data source plugin for Grafana is [open source on GitHub](https://github.com/axiomhq/axiom-grafana). You can install it with the Grafana CLI or with Docker.

### Install the Axiom Grafana Plugin using Grafana CLI

```bash
grafana-cli plugins install axiomhq-axiom-datasource
```

### Install via Docker

* Add the plugin to your `docker-compose.yml` or `Dockerfile`.

* Set the environment variable `GF_INSTALL_PLUGINS` to include the plugin.

Example:

`GF_INSTALL_PLUGINS="axiomhq-axiom-datasource"`
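`GF_INSTALL_PLUGINS` takes a comma-separated list, so if your compose file or Dockerfile already installs other plugins, add the Axiom plugin ID to that list instead of replacing it. A small Python sketch of that merge, assuming each entry is either a bare plugin ID or an ID followed by a space and a version (`include_plugin` is a hypothetical helper, not part of Grafana):

```python
# Sketch: add the Axiom plugin to a GF_INSTALL_PLUGINS value without dropping
# plugins that are already listed. Assumes entries look like "plugin-id" or
# "plugin-id version", separated by commas. The helper name is hypothetical.

AXIOM_PLUGIN_ID = "axiomhq-axiom-datasource"


def include_plugin(current: str, plugin_id: str = AXIOM_PLUGIN_ID) -> str:
    entries = [e.strip() for e in current.split(",") if e.strip()]
    # Compare on the ID part only, so a pinned version of the plugin counts as present.
    if not any(e.split()[0] == plugin_id for e in entries):
        entries.append(plugin_id)
    return ",".join(entries)


# Example: a Grafana container that already installs the clock panel plugin.
env_line = f'GF_INSTALL_PLUGINS="{include_plugin("grafana-clock-panel")}"'
```

Running it twice on the same value leaves the list unchanged, so it’s safe to apply in a build script.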

## Configuration

* Add a new data source in Grafana.

* Select the Axiom data source type.

* Enter the previously generated API token.

* Save and test the data source.

## Build queries with the query editor

The Axiom data source plugin provides a custom query editor to build and visualize your Axiom event data. After configuring the Axiom data source, start building visualizations from metrics and logs stored in Axiom.

* Create a new panel in Grafana by clicking **Add visualization**.

* Select the Axiom data source.

* Use the query editor to choose the desired metrics, dimensions, and filters.

## Benefits of the Axiom Grafana data source plugin

The Axiom Grafana data source plugin allows users to display and interact with their Axiom data directly from within Grafana. By doing so, it provides several advantages:

1. **Unified visualization:** The Axiom Grafana data source plugin allows users to utilize Grafana’s powerful visualization tools with Axiom’s data. This enables users to create, explore, and share dashboards which visually represent their Axiom logs and metrics.

2. **Rich querying capability:** Grafana has a powerful and flexible interface for building data queries. With the Axiom plugin, you can leverage this capability to build complex queries against your Axiom data.

3. **Customizable alerting:** Grafana’s alerting feature lets you set alerts based on your query results, including custom alerts for specific conditions in your Axiom log data.

4. **Sharing and collaboration:** Grafana’s features for sharing and collaboration can help teams work together more effectively. Share Axiom data visualizations with others, collaborate on dashboards, and discuss insights directly in Grafana.

# Map location data with Axiom and Hex

Source: https://axiom.co/docs/apps/hex

This page explains how to visualize geospatial log data from Axiom using Hex interactive maps.

Hex is a powerful collaborative data platform that allows you to create notebooks with Python/SQL code and interactive visualizations.

This page explains how to integrate Hex with Axiom to visualize geospatial data from your logs. You ingest location data into Axiom, query it using APL, and create interactive map visualizations in Hex.

## Prerequisites

* [Create an Axiom account](https://app.axiom.co/register).

* [Create a dataset in Axiom](/reference/datasets#create-dataset) where you send your data.

* [Create an API token in Axiom](/reference/tokens) with permissions to update the dataset you have created.

* [Create a Hex account](https://app.hex.tech/).

## Send geospatial data to Axiom

Send your sample location data to Axiom using the API endpoint. For example, the following HTTP request sends sample robot location data with latitude, longitude, status, and satellite information.

```bash
curl -X 'POST' 'https://AXIOM_DOMAIN/v1/datasets/DATASET_NAME/ingest' \
  -H 'Authorization: Bearer API_TOKEN' \
  -H 'Content-Type: application/json' \
  -d '[
    {
      "data": {
        "robot_id": "robot-001",
        "latitude": 37.7749,
        "longitude": -122.4194,
        "num_satellites": 8,
        "status": "active"
      }
    }
  ]'
```
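If you’d rather send the same event from Python, the curl request above maps directly onto the standard library’s `urllib.request`. This is a sketch: `build_ingest_request` is a hypothetical helper, and `AXIOM_DOMAIN`, `DATASET_NAME`, and `API_TOKEN` are the same placeholders as in the curl example. Building the request doesn’t send anything.

```python
# Sketch: the curl ingest request, rebuilt with Python's standard library.
# AXIOM_DOMAIN, DATASET_NAME, and API_TOKEN are placeholders, as in the curl example.
import json
import urllib.request


def build_ingest_request(domain: str, dataset: str, token: str, events: list) -> urllib.request.Request:
    # Same endpoint, headers, and JSON array body as the curl command.
    return urllib.request.Request(
        url=f"https://{domain}/v1/datasets/{dataset}/ingest",
        data=json.dumps(events).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )


events = [
    {
        "data": {
            "robot_id": "robot-001",
            "latitude": 37.7749,
            "longitude": -122.4194,
            "num_satellites": 8,
            "status": "active",
        }
    }
]
req = build_ingest_request("AXIOM_DOMAIN", "DATASET_NAME", "API_TOKEN", events)
# To actually send it, replace the placeholders and call urllib.request.urlopen(req).
```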

## Monitor Lambda functions and usage in Axiom

Having real-time visibility into your function logs is important because any delay between sending a Lambda request and its execution adds to customer-facing latency. You need to be able to measure and track your Lambda invocations, maximum and minimum execution time, and all invocations by function.
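The measurements listed above (invocations, plus minimum and maximum execution time, per function) amount to a group-by over invocation records. A minimal Python sketch, assuming each record is a hypothetical `(function_name, duration_ms)` pair rather than the actual shape of the data the Axiom Lambda Extension sends:

```python
# Sketch: invocations and min/max execution time per Lambda function.
# The (function_name, duration_ms) record shape is a hypothetical simplification.
from collections import defaultdict


def invocation_stats(records):
    stats = defaultdict(lambda: {"invocations": 0, "min_ms": float("inf"), "max_ms": 0.0})
    for fn, duration_ms in records:
        s = stats[fn]
        s["invocations"] += 1
        s["min_ms"] = min(s["min_ms"], duration_ms)
        s["max_ms"] = max(s["max_ms"], duration_ms)
    return dict(stats)


records = [
    ("checkout", 120.5),
    ("checkout", 80.0),
    ("resize-image", 950.2),
    ("checkout", 300.1),
]
summary = invocation_stats(records)
```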

The Axiom Lambda Extension gives you full visibility into the most important metrics and logs coming from your Lambda function out of the box without any further configuration required.

## Track cold start on your Lambda function

A cold start is a delay between an invocation and the moment the runtime is ready, caused by the initialization process. During this period, there’s no available function instance to respond to the invocation. With the Axiom built-in Serverless AWS Lambda dashboard, you can track cold starts and see their impact on every Lambda function. This data lets you know when to take actionable steps, such as using provisioned concurrency or reducing function dependencies.
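The Axiom dashboard surfaces cold starts for you. To see what the underlying signal looks like in raw logs, here is a minimal Python sketch, assuming you have the `REPORT` line that Lambda writes at the end of each invocation: Lambda adds an `Init Duration` field only when the invocation had to initialize a new execution environment. `parse_report` and the keys it returns are hypothetical, and the sample lines are made up.

```python
# Sketch: flag cold starts from Lambda REPORT log lines.
# An "Init Duration" field appears only on invocations that initialized a new environment.
import re

REPORT_RE = re.compile(r"^REPORT RequestId: (?P<id>\S+).*?Duration: (?P<duration>[\d.]+) ms")
INIT_RE = re.compile(r"Init Duration: (?P<init>[\d.]+) ms")


def parse_report(line: str):
    m = REPORT_RE.search(line)
    if m is None:
        return None  # Not a REPORT line (for example START or END).
    init = INIT_RE.search(line)
    return {
        "request_id": m.group("id"),
        "duration_ms": float(m.group("duration")),
        "cold_start": init is not None,
        "init_ms": float(init.group("init")) if init else 0.0,
    }


lines = [
    "REPORT RequestId: a1\tDuration: 12.34 ms\tBilled Duration: 13 ms\t"
    "Memory Size: 128 MB\tMax Memory Used: 70 MB\tInit Duration: 200.15 ms",
    "REPORT RequestId: b2\tDuration: 8.10 ms\tBilled Duration: 9 ms\t"
    "Memory Size: 128 MB\tMax Memory Used: 71 MB",
    "START RequestId: c3 Version: $LATEST",
]
parsed = [p for p in map(parse_report, lines) if p]
cold_start_rate = sum(p["cold_start"] for p in parsed) / len(parsed)
```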

## Optimize slow-performing Lambda queries

Grouping logs with Lambda invocations and execution time by function provides insights into the request and response patterns of your events. You can extend your query to view when an invocation request is rejected and configure alerts to be notified about Serverless log patterns and Lambda function payloads. With the invocation request dashboard, you can monitor request function logs and see how your Lambda serverless functions process your events and Lambda queues over time.

## Detect timeout on your Lambda function

Axiom Lambda function monitors let you identify the different points of invocation failures, cold-start delays, and AWS Lambda errors on your Lambda functions. With standard function logs like invocations by function and Lambda cold start, monitoring your execution time can alert you to a significant spike whenever an error occurs in your Lambda function.
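One way to turn execution-time monitoring into an early timeout warning is to compare each invocation’s duration against the function’s configured timeout. The sketch below is a hypothetical helper; the 80% threshold and the input shape (a list of durations in milliseconds) are illustrative assumptions, not Axiom defaults.

```python
# Sketch: flag invocations whose duration approaches the configured Lambda timeout.
# The 0.8 threshold and the input shape are illustrative assumptions.


def near_timeout(durations_ms, timeout_s, threshold=0.8):
    """Return the indexes of invocations at or above threshold * timeout."""
    limit_ms = timeout_s * 1000 * threshold
    return [i for i, d in enumerate(durations_ms) if d >= limit_ms]


durations = [120.0, 2500.0, 2999.9, 400.0]
flagged = near_timeout(durations, timeout_s=3)  # 3-second timeout, warn from 2400 ms
```

A rising share of flagged invocations is a reasonable trigger for an alert before functions start hitting the hard timeout.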

## Smart filters

Axiom Lambda Serverless Smart Filters let you easily filter down to specific AWS Lambda functions or Serverless projects and use saved queries to get deep insights on how functions are performing with a single click.

# Connect Axiom with Netlify

Source: https://axiom.co/docs/apps/netlify

This page explains how to connect Axiom with Netlify.

Integrate Axiom with Netlify to get a comprehensive observability experience for your Netlify projects. This integration will give you a better understanding of how your Jamstack apps are performing.

You can easily monitor logs and metrics related to your website traffic, serverless functions, and app requests. The integration is easy to set up, and you don’t need to configure anything to get started.

With Axiom’s Zero-Config Observability app, you can see all your metrics in real time, without sampling. That means you can get a complete view of your app’s performance without any gaps in data.

Axiom’s Netlify app comes with a pre-built dashboard that gives you control over your Jamstack projects. You can use this dashboard to track key metrics and make informed decisions about your app’s performance.

Overall, the Axiom Netlify app makes it easy to monitor and optimize your Jamstack apps. However, note that this integration is only available to Netlify customers on enterprise-level plans where [Log Drains are supported](https://docs.netlify.com/monitor-sites/log-drains/).

## What is Netlify?

Netlify is a platform for building highly performant and dynamic websites, e-commerce stores, and web apps. Netlify automatically builds your site and deploys it across its global edge network.

The Netlify platform provides teams everything they need to take modern web projects from the first preview to full production.

## Sending logs to Axiom

The log events in Axiom give you better insight into the state of your Netlify site environment so that you can easily monitor traffic volume, website configurations, function logs, resource usage, and more.

1. Log in to your [Axiom account](https://app.axiom.co/), click **Apps** in the **Settings** menu, select the **Netlify app**, and click **Install now**.

# Connect Axiom with Netlify

Source: https://axiom.co/docs/apps/netlify

Integrating Axiom with Netlify to get a comprehensive observability experience for your Netlify projects. This app will give you a better understanding of how your Jamstack apps are performing.

Integrate Axiom with Netlify to get a comprehensive observability experience for your Netlify projects. This integration will give you a better understanding of how your Jamstack apps are performing.

You can easily monitor logs and metrics related to your website traffic, serverless functions, and app requests. The integration is easy to set up, and you don’t need to configure anything to get started.

With Axiom’s Zero-Config Observability app, you can see all your metrics in real-time, without sampling. That means you can get a complete view of your app’s performance without any gaps in data.

Axiom’s Netlify app is complete with a pre-built dashboard that gives you control over your Jamstack projects. You can use this dashboard to track key metrics and make informed decisions about your app’s performance.

Overall, the Axiom Netlify app makes it easy to monitor and optimize your Jamstack apps. However, this integration is only available to Netlify customers on enterprise-level plans where [Log Drains are supported](https://docs.netlify.com/monitor-sites/log-drains/).

## What is Netlify

Netlify is a platform for building high-performance, dynamic websites, e-commerce stores, and web apps. Netlify automatically builds your site and deploys it across its global edge network.

The Netlify platform provides teams with everything they need to take modern web projects from the first preview to full production.

## Sending logs to Axiom

The log events that Axiom collects give you better insight into the state of your Netlify sites so that you can easily monitor traffic volume, website configurations, function logs, resource usage, and more.

1. Log in to your [Axiom account](https://app.axiom.co/), click **Apps** in the **Settings** menu, select the **Netlify app**, and then click **Install now**.

   * Axiom redirects you to Netlify to authorize Axiom.

   * Click **Authorize**, and then copy the integration token.

2. Log in to your **Netlify Team Account**, open your site settings, and select **Log Drains**.

   * In your log drain service, select **Axiom**, paste the integration token from Step 1, and then click **Connect**.

## App overview

### Traffic and function logs

With Axiom, you can instrument and actively monitor your Netlify sites, stream your build logs, and analyze your deployment process, or use our pre-built Netlify dashboard to get an overview of all the important traffic data, usage, and metrics. As users interact with the sites you host on Netlify, those sites produce various logs. Axiom captures and ingests all these logs into the `netlify` dataset.

You can also drill down to your site source with our advanced query language and fork our dashboard to start building your own site monitors.

* Back in your Axiom datasets console, you’ll see all your traffic and function logs in the `netlify` dataset.

### Live stream logs

Stream your site and app logs live, and filter them to see important information.

### Zero-config dashboard for your Netlify sites

Use our pre-built Netlify dashboard to get an overview of all the important metrics. When you’re ready, you can fork our dashboard and start building your own!

## Start logging Netlify Sites today

The Axiom Netlify integration lets you monitor and log all of your sites and apps in one place. With the Axiom app, you can quickly detect site errors and get high-level insights into your Netlify projects.

* We welcome ideas, feedback, and collaboration. Join us in our [Discord Community](http://axiom.co/discord) to share them with us.

# Connect Axiom with Tailscale

Source: https://axiom.co/docs/apps/tailscale

This page explains how to integrate Axiom with Tailscale.

Tailscale is a secure networking solution that allows you to create and manage a private network (tailnet), securely connecting all your devices.

Integrating Axiom with Tailscale allows you to stream your audit and network flow logs directly to Axiom seamlessly, unlocking powerful insights and analysis. Whether you’re conducting a security audit, optimizing performance, or ensuring compliance, Axiom’s Tailscale dashboard equips you with the tools to maintain a secure and efficient network, respond quickly to potential issues, and make informed decisions about your network configuration and usage.

## Prerequisites

* [Create an Axiom account](https://app.axiom.co/register).

* [Create a dataset in Axiom](/reference/datasets#create-dataset) where you send your data.

* [Create an API token in Axiom](/reference/tokens) with permissions to update the dataset you have created.

* [Create a Tailscale account](https://login.tailscale.com/start).

## Setup

1. In Tailscale, go to the [configuration logs page](https://login.tailscale.com/admin/logs) of the admin console.

2. Add Axiom as a configuration log streaming destination in Tailscale. For more information, see the [Tailscale documentation](https://tailscale.com/kb/1255/log-streaming?q=stream#add-a-configuration-log-streaming-destination).

## Tailscale dashboard

Axiom displays the data it receives in a pre-built Tailscale dashboard that delivers immediate, actionable insights into your tailnet’s activity and health.

This comprehensive overview includes:

* **Log type distribution**: Understand the balance between configuration audit logs and network flow logs over time.

* **Top actions and hosts**: Identify the most common network actions and most active devices.

* **Traffic visualization**: View physical, virtual, and exit traffic patterns for both sources and destinations.

* **User activity tracking**: Monitor actions by user display name, email, and ID for security audits and compliance.

* **Configuration log stream**: Access a detailed audit trail of all configuration changes.

With these insights, you can:

* Quickly identify unusual network activity or traffic patterns.

* Track configuration changes and user actions.

* Monitor overall network health and performance.

* Investigate specific events or users as needed.

* Understand traffic distribution across your tailnet.

# Connect Axiom with Terraform

Source: https://axiom.co/docs/apps/terraform

Provision and manage Axiom resources such as datasets and monitors with Terraform.

The Axiom Terraform Provider lets you provision and manage Axiom resources (datasets, notifiers, monitors, and users) with Terraform. This means that you can programmatically create resources, access existing ones, and perform further infrastructure automation tasks.

Install the Axiom Terraform Provider from the [Terraform Registry](https://registry.terraform.io/providers/axiomhq/axiom/latest). To see the provider in action, check out the [example](https://github.com/axiomhq/terraform-provider-axiom/blob/main/example/main.tf).

This guide explains how to install the provider and perform some common procedures such as creating new resources and accessing existing ones. For the full API reference, see the [documentation in the Terraform Registry](https://registry.terraform.io/providers/axiomhq/axiom/latest/docs).

## Prerequisites

* [Sign up for a free Axiom account](https://app.axiom.co/register). All you need is an email address.

* [Create an advanced API token in Axiom](/reference/tokens#create-advanced-api-token) with the permissions to perform the actions you want to use Terraform for. For example, to use Terraform to create and update datasets, create the advanced API token with these permissions.

* [Create a Terraform account](https://app.terraform.io/signup/account).

* [Install the Terraform CLI](https://developer.hashicorp.com/terraform/cli).

## Install the provider

To install the Axiom Terraform Provider from the [Terraform Registry](https://registry.terraform.io/providers/axiomhq/axiom/latest), follow these steps:

1. Add the following code to your Terraform configuration file. Replace `API_TOKEN` with the Axiom API token you have generated. For added security, store the API token in an environment variable.

```hcl
terraform {
  required_providers {
    axiom = {
      source = "axiomhq/axiom"
    }
  }
}

provider "axiom" {
  api_token = "API_TOKEN"
}
```

2. In your terminal, go to the folder of your main Terraform configuration file, and then run the command `terraform init`.

## Create new resources

### Create dataset

To create a dataset in Axiom using the provider, add the following code to your Terraform configuration file. Customize the `name` and `description` fields.

```hcl
resource "axiom_dataset" "test_dataset" {
  name        = "test_dataset"
  description = "This is a test dataset created by Terraform."
}
```

### Create notifier

To create a Slack notifier in Axiom using the provider, add the following code to your Terraform configuration file. Replace `SLACK_URL` with the webhook URL from your Slack instance. For more information on obtaining this URL, see the [Slack documentation](https://api.slack.com/messaging/webhooks).

```hcl
resource "axiom_notifier" "test_slack_notifier" {
  name = "test_slack_notifier"
  properties = {
    slack = {
      slack_url = "SLACK_URL"
    }
  }
}
```

To create a Discord notifier in Axiom using the provider, add the following code to your Terraform configuration file.

* Replace `DISCORD_CHANNEL` with the webhook URL from your Discord instance. For more information on obtaining this URL, see the [Discord documentation](https://discord.com/developers/resources/webhook).

* Replace `DISCORD_TOKEN` with your Discord API token. For more information on obtaining this token, see the [Discord documentation](https://discord.com/developers/topics/oauth2).

```hcl
resource "axiom_notifier" "test_discord_notifier" {
  name = "test_discord_notifier"
  properties = {
    discord = {
      discord_channel = "DISCORD_CHANNEL"
      discord_token   = "DISCORD_TOKEN"
    }
  }
}
```

To create an email notifier in Axiom using the provider, add the following code to your Terraform configuration file. Replace `EMAIL1` and `EMAIL2` with the email addresses you want to notify.

```hcl
resource "axiom_notifier" "test_email_notifier" {
  name = "test_email_notifier"
  properties = {
    email = {
      emails = ["EMAIL1", "EMAIL2"]
    }
  }
}
```

For more information on the types of notifier you can create, see the [documentation in the Terraform Registry](https://registry.terraform.io/providers/axiomhq/axiom/latest/resources/notifier).

### Create monitor

To create a monitor in Axiom using the provider, add the following code to your Terraform configuration file and customize it:

```hcl
resource "axiom_monitor" "test_monitor" {
  depends_on = [axiom_dataset.test_dataset, axiom_notifier.test_slack_notifier]

  # `type` can be one of the following:
  # - "Threshold": For numeric values against thresholds. It requires `operator` and `threshold`.
  # - "MatchEvent": For detecting specific events. It doesn’t require `operator` and `threshold`.
  # - "AnomalyDetection": For detecting anomalies. It requires `compare_days`, `tolerance`, and `operator`.
  type             = "Threshold"
  name             = "test_monitor"
  description      = "This is a test monitor created by Terraform."
  apl_query        = "['test_dataset'] | summarize count() by bin_auto(_time)"
  interval_minutes = 5

  # `operator` is required for threshold and anomaly detection monitors.
  # Valid values are "Above", "AboveOrEqual", "Below", "BelowOrEqual".
  operator      = "Above"
  range_minutes = 5

  # `threshold` is required for threshold monitors.
  threshold = 1

  # `compare_days` and `tolerance` are required for anomaly detection monitors.
  # Uncomment the two lines below for anomaly detection monitors.
  # compare_days = 7
  # tolerance    = 25

  notifier_ids = [
    axiom_notifier.test_slack_notifier.id
  ]

  alert_on_no_data = false
  notify_by_group  = false
}
```

This example creates a monitor using the dataset `test_dataset` and the notifier `test_slack_notifier`. These are the resources you created in the sections above.

* Customize the `name` and the `description` fields.

* In the `apl_query` field, specify the APL query for the monitor.

For more information on these fields, see the [documentation in the Terraform Registry](https://registry.terraform.io/providers/axiomhq/axiom/latest/resources/monitor).
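The comments in the monitor configuration above encode which fields each monitor `type` needs. As an illustration of those rules only, the following JavaScript sketch checks a plain object that mirrors the HCL attributes. `checkMonitorFields` is a hypothetical helper, not part of the Axiom provider or API, and its rules come from the comments above rather than from the provider’s own validation.

```javascript
// Hypothetical pre-check that mirrors the rules stated in the monitor comments.
// It is not part of the Axiom Terraform provider.
const VALID_OPERATORS = ["Above", "AboveOrEqual", "Below", "BelowOrEqual"];

// Fields each monitor type requires, per the comments in the HCL example.
const REQUIRED_FIELDS = {
  Threshold: ["operator", "threshold"],
  MatchEvent: [],
  AnomalyDetection: ["operator", "compare_days", "tolerance"],
};

// Returns a list of problems; an empty list means the object passes the check.
function checkMonitorFields(monitor) {
  const required = REQUIRED_FIELDS[monitor.type];
  if (required === undefined) {
    return [`unknown monitor type: ${monitor.type}`];
  }
  const errors = [];
  for (const field of required) {
    if (monitor[field] === undefined) {
      errors.push(`${monitor.type} monitors require \`${field}\``);
    }
  }
  if (monitor.operator !== undefined && !VALID_OPERATORS.includes(monitor.operator)) {
    errors.push(`invalid operator: ${monitor.operator}`);
  }
  return errors;
}
```

For example, an `AnomalyDetection` object that only sets `operator` is reported as missing `compare_days` and `tolerance`, which matches the two commented-out lines you need to uncomment in the HCL.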

### Create user

To create a user in Axiom using the provider, add the following code to your Terraform configuration file. Customize the `name`, `email`, and `role` fields.

```hcl
resource "axiom_user" "test_user" {
  name  = "test_user"
  email = "test@abc.com"
  role  = "user"
}
```

## Access existing resources

### Access existing dataset

To access an existing dataset, follow these steps:

1. Determine the ID of the Axiom dataset by sending a GET request to the [`datasets` endpoint of the Axiom API](/restapi/endpoints/getDatasets).

2. Add the following code to your Terraform configuration file. Replace `DATASET_ID` with the ID of the Axiom dataset.

```hcl
data "axiom_dataset" "test_dataset" {
  id = "DATASET_ID"
}
```
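Step 1 describes the GET request but doesn’t show it. The following JavaScript sketch looks up a resource ID by name so you can paste it into a `data` block. It assumes the base URL `https://api.axiom.co/v2`, Bearer-token authentication, endpoint paths named after the resource kind (`datasets`, `notifiers`, `monitors`, `users`), and a JSON array response whose items have `id` and `name` fields. Check these assumptions against the Axiom API reference before relying on them. `findResourceId` is a hypothetical helper.

```javascript
// Minimal sketch: look up the ID of an Axiom resource by name.
// Assumptions (not confirmed by this page): the base URL below, Bearer-token
// auth, and a JSON array response whose items carry `id` and `name`.
// Uses the global `fetch` (Node.js 18+) unless you pass your own implementation.
const AXIOM_API_BASE = "https://api.axiom.co/v2"; // assumed base URL

// Hypothetical helper. `kind` is "datasets", "notifiers", "monitors", or "users".
async function findResourceId(kind, name, token, fetchImpl = fetch) {
  const res = await fetchImpl(`${AXIOM_API_BASE}/${kind}`, {
    method: "GET",
    headers: { Authorization: `Bearer ${token}` },
  });
  if (!res.ok) {
    throw new Error(`GET /${kind} failed with status ${res.status}`);
  }
  const items = await res.json();
  if (!Array.isArray(items)) {
    throw new Error(`unexpected response shape for /${kind}`);
  }
  // Return the ID of the first item whose name matches, or undefined.
  const match = items.find((item) => item.name === name);
  return match === undefined ? undefined : match.id;
}
```

For example, `findResourceId("datasets", "test_dataset", process.env.AXIOM_TOKEN)` resolves to the dataset ID, or to `undefined` if no dataset has that name. `AXIOM_TOKEN` is an illustrative environment variable name. The same call with `"notifiers"`, `"monitors"`, or `"users"` follows the pattern of the remaining steps on this page.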

### Access existing notifier

To access an existing notifier, follow these steps:

1. Determine the ID of the Axiom notifier by sending a GET request to the `notifiers` endpoint of the Axiom API.

2. Add the following code to your Terraform configuration file. Replace `NOTIFIER_ID` with the ID of the Axiom notifier.

```hcl
data "axiom_notifier" "test_slack_notifier" {
  id = "NOTIFIER_ID"
}
```

### Access existing monitor

To access an existing monitor, follow these steps:

1. Determine the ID of the Axiom monitor by sending a GET request to the `monitors` endpoint of the Axiom API.

2. Add the following code to your Terraform configuration file. Replace `MONITOR_ID` with the ID of the Axiom monitor.

```hcl
data "axiom_monitor" "test_monitor" {
  id = "MONITOR_ID"
}
```

### Access existing user

To access an existing user, follow these steps:

1. Determine the ID of the Axiom user by sending a GET request to the `users` endpoint of the Axiom API.

2. Add the following code to your Terraform configuration file. Replace `USER_ID` with the ID of the Axiom user.

```hcl
data "axiom_user" "test_user" {
  id = "USER_ID"
}
```

# Connect Axiom with Vercel

Source: https://axiom.co/docs/apps/vercel

Easily monitor data from requests, functions, and web vitals in one place to get the deepest observability experience for your Vercel projects.

Connect Axiom with Vercel to get the deepest observability experience for your Vercel projects.

Easily monitor data from requests, functions, and web vitals in one place. 100% live and 100% of your data, no sampling.

Axiom’s Vercel app ships with a pre-built dashboard and pre-installed monitors so you can be in complete control of your projects with minimal effort.

If you use the Axiom Vercel integration, Axiom automatically creates [annotations](/query-data/annotate-charts) for deployments.

## What is Vercel?

Vercel is a platform for frontend frameworks and static sites, built to integrate with your headless content, commerce, or database.

Vercel provides a frictionless developer experience to take care of the hard things: deploying instantly, scaling automatically, and serving personalized content around the globe.

Vercel makes it easy for frontend teams to develop, preview, and ship delightful user experiences, where performance is the default.

## Send logs to Axiom

Install the [Axiom Vercel app](https://vercel.com/integrations/axiom) to start streaming logs and web vitals within minutes!

## App overview

### Request and function logs

For both requests and serverless functions, Axiom automatically installs a [log drain](https://vercel.com/blog/log-drains) in your Vercel account to capture data live.

As users interact with your website, it produces various logs. Axiom captures all these logs and ingests them into the `vercel` dataset. You can stream and analyze these logs live, or use our pre-built Vercel dashboard to get an overview of all the important metrics. When you’re ready, you can fork our dashboard and start building your own!

For function logs, if you call `console.log`, `console.warn` or `console.error` in your function, the output will also be captured and made available as part of the log. You can use our extended query language, APL, to easily search these logs.
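Because `console.log`, `console.warn`, and `console.error` output becomes part of the log, you can print one JSON string per call and parse it later with APL. The following is a minimal sketch of that pattern, assuming you want structured output. `logStructured` is a hypothetical helper, not part of any Vercel or Axiom API.

```javascript
// Sketch: emit one JSON string per console call from a Vercel function.
// The captured output ends up in the `message` field of the log event,
// where it can be parsed with APL. `logStructured` is hypothetical.
function logStructured(level, message, metadata = {}) {
  const line = JSON.stringify({ message, metadata });
  if (level === "error") {
    console.error(line);
  } else if (level === "warn") {
    console.warn(line);
  } else {
    console.log(line);
  }
  return line; // returned so callers and tests can inspect what was printed
}

// Example call inside a serverless function handler.
logStructured("info", "user signed in", { userId: 2234, signInType: "sso-google" });
```

Keeping each entry on a single JSON line makes the stringified payload in the `message` field predictable to parse.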

## Web vitals

Axiom supports capturing and analyzing Web Vitals data directly from your users’ browsers without any sampling and with more data than is available elsewhere. It pairs well with Vercel’s built-in analytics when you want to dig deep into a specific problem or debug issues with a specific audience (user agent, location, region, and so on).

...

);

}

```

Logged in

; } ``` ### Server Components For Server Components, create a logger and make sure to call flush before returning: ```js import { Logger } from 'next-axiom'; export default async function ServerComponent() { const log = new Logger(); log.info('User logged in', { userId: 42 }); // ... other operations ... await log.flush(); returnLogged in

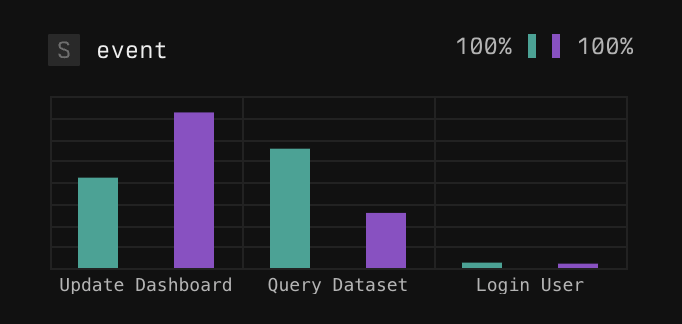

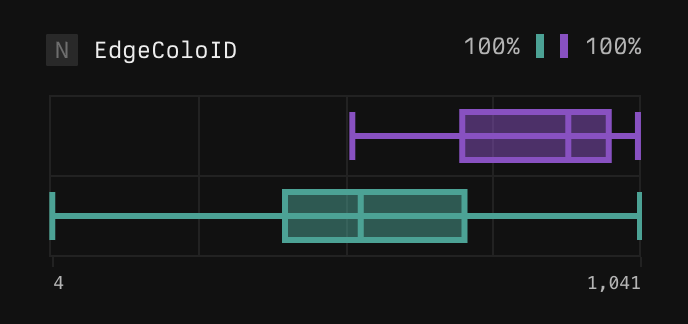

; } ``` ### Route Handlers For Route Handlers, wrapping your Route Handlers in `withAxiom` will add a logger to your request and automatically log exceptions: ```js import { withAxiom, AxiomRequest } from 'next-axiom'; export const GET = withAxiom((req: AxiomRequest) => { req.log.info('Login function called'); // You can create intermediate loggers const log = req.log.with({ scope: 'user' }); log.info('User logged in', { userId: 42 }); return NextResponse.json({ hello: 'world' }); }); ``` ## Use Next.js 12 for Web Vitals If you’re using Next.js version 12, follow the instructions below to integrate Axiom for logging and capturing Web Vitals data. In your `pages/_app.js` or `pages/_app.ts` and add the following line: ```js export { reportWebVitals } from 'next-axiom'; ``` ## Upgrade to Next.js 13 from Next.js 12 If you plan on upgrading to Next.js 13, you'll need to make specific changes to ensure compatibility: * Upgrade the next-axiom package to version `1.0.0` or higher: * Make sure any exported variables have the `NEXT_PUBLIC_ prefix`, for example,, `NEXT_PUBLIC_AXIOM_TOKEN`. * In client components, use the `useLogger` hook instead of the `log` prop. * For server-side components, you need to create an instance of the `Logger` and flush the logs before the component returns. * For Web Vitals tracking, you'll replace the previous method of capturing data. Remove the `reportWebVitals()` line and instead integrate the `AxiomWebVitals` component into your layout. ## Vercel Function logs 4KB limit The Vercel 4KB log limit refers to a restriction placed by Vercel on the size of log output generated by serverless functions running on their platform. The 4KB log limit means that each log entry produced by your function should be at most 4 Kilobytes in size. If your log output is larger than 4KB, you might experience truncation or missing logs. To log above this limit, you can send your function logs using [next-axiom](https://github.com/axiomhq/next-axiom). 
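To tell whether an entry is at risk of hitting the 4KB limit described above, you can measure its encoded size before printing it. This is a minimal sketch that assumes "4KB" means 4096 bytes of UTF-8 output; Vercel’s exact accounting may differ. `logEntryBytes` and `exceedsVercelLogLimit` are hypothetical helpers.

```javascript
// Sketch: estimate whether a log entry exceeds Vercel's per-entry limit.
// Assumption: the limit is 4096 bytes of UTF-8 encoded text.
const VERCEL_LOG_LIMIT_BYTES = 4 * 1024;

// Size in bytes of the text a JSON logger would print for this entry.
function logEntryBytes(entry) {
  const text = typeof entry === "string" ? entry : JSON.stringify(entry);
  return new TextEncoder().encode(text).length;
}

function exceedsVercelLogLimit(entry) {
  return logEntryBytes(entry) > VERCEL_LOG_LIMIT_BYTES;
}
```

The check counts bytes rather than characters because non-ASCII characters take more than one byte in UTF-8. Entries that fail it are candidates for sending through next-axiom instead.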
## Parse JSON on the message field

If you use a logging library in your Vercel project that prints JSON, your **message** field contains a stringified and therefore escaped JSON object.

* If your Vercel logs are encoded as JSON, they look like this:

```json
{
  "level": "error",
  "message": "{ \"message\": \"user signed in\", \"metadata\": { \"userId\": 2234, \"signInType\": \"sso-google\" }}",
  "request": {
    "host": "www.axiom.co",
    "id": "iad1:iad1::sgh2r-1655985890301-f7025aa764a9",
    "ip": "199.16.157.13",
    "method": "GET",
    "path": "/sign-in/google",
    "scheme": "https",
    "statusCode": 500,
    "teamName": "AxiomHQ"
  },
  "vercel": {
    "deploymentId": "dpl_7UcdgdgNsdgbcPY3Lg6RoXPfA6xbo8",
    "deploymentURL": "axiom-bdsgvweie6au-axiomhq.vercel.app",
    "projectId": "prj_TxvF2SOZdgdgwJ2OBLnZH2QVw7f1Ih7",
    "projectName": "axiom-co",
    "region": "iad1",
    "route": "/signin/[id]",
    "source": "lambda-log"
  }
}
```

* The **JSON** data in your **message** would be:

```json
{
  "message": "user signed in",
  "metadata": {
    "userId": 2234,
    "signInType": "sso-google"
  }
}
```

You can **parse** the JSON using the [parse\_json function](/apl/scalar-functions/string-functions#parse-json\(\)) and run queries against the **values** in the **message** field.
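The **message** field needs a second parse because the outer log entry is JSON, and its `message` property is itself a JSON string. The following TypeScript sketch shows that double decoding outside Axiom. It does in code what `parse_json(message)` does in a query. The `VercelLogEntry` type is a hypothetical, trimmed-down shape that includes only the fields used here, and `parseMessage` is a hypothetical helper. Returning `null` for non-object messages is a choice made for this sketch and isn't a description of `parse_json` behavior.

```typescript
// Hypothetical, trimmed-down shape of a Vercel log entry (only the fields used below).
interface VercelLogEntry {
  level: string;
  message: string; // stringified (escaped) JSON written by your logging library
}

// Parses the stringified JSON inside `message`.
// Returns null if the message isn't valid JSON or isn't a JSON object.
function parseMessage(entry: VercelLogEntry): Record<string, unknown> | null {
  try {
    const inner: unknown = JSON.parse(entry.message);
    return typeof inner === "object" && inner !== null && !Array.isArray(inner)
      ? (inner as Record<string, unknown>)
      : null;
  } catch {
    return null;
  }
}

// First parse: the outer log entry. Its `message` is still a string at this point.
const raw =
  '{"level":"error","message":"{ \\"message\\": \\"user signed in\\", \\"metadata\\": { \\"userId\\": 2234, \\"signInType\\": \\"sso-google\\" }}"}';
const entry = JSON.parse(raw) as VercelLogEntry;

// Second parse: the JSON object embedded in `message`.
const parsed = parseMessage(entry);
console.log(parsed?.message); // user signed in
```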
### Example

```kusto
['vercel']
| extend parsed = parse_json(message)
```

* You can select the field to **insert** into new columns using the [project operator](/apl/tabular-operators/project-operator):

```kusto
['vercel']
| extend parsed = parse_json('{"message":"user signed in", "metadata": { "userId": 2234, "SignInType": "sso-google" }}')
| project parsed["message"]
```

### More Examples

* If you have **null values** in your data, you can use the **isnotnull()** function:

```kusto
['vercel']
| extend parsed = parse_json(message)
| where isnotnull(parsed)
| summarize count() by parsed["message"], parsed["metadata"]["userId"]
```

* Check out our [APL documentation on how to use more functions](/apl/scalar-functions/string-functions) and run your own queries against your Vercel logs.

## Migrate from Vercel app to next-axiom

In May 2024, Vercel [introduced higher costs](https://axiom.co/blog/changes-to-vercel-log-drains) for using Vercel Log Drains. Because the Axiom Vercel app depends on Log Drains, using the next-axiom library can be the cheaper option to analyze telemetry data for higher-volume projects.

To migrate from the Axiom Vercel app to the next-axiom library, follow these steps:

1. Delete the existing log drain from your Vercel project.
2. Delete `NEXT_PUBLIC_AXIOM_INGEST_ENDPOINT` from the environment variables of your Vercel project. For more information, see the [Vercel documentation](https://vercel.com/projects/environment-variables).
3. [Create a new dataset in Axiom](/reference/datasets), and [create a new advanced API token](/reference/tokens) with ingest permissions for that dataset.
4. Add the following environment variables to your Vercel project:
   * `NEXT_PUBLIC_AXIOM_DATASET` is the name of the Axiom dataset where you want to send data.
   * `NEXT_PUBLIC_AXIOM_TOKEN` is the Axiom API token you have generated.
5. In your terminal, go to the root folder of your Next.js app, and then run `npm install --save next-axiom` to install the latest version of next-axiom.
6. In the `next.config.ts` file, wrap your Next.js configuration in `withAxiom`:

```js
const { withAxiom } = require('next-axiom');

module.exports = withAxiom({
  // Your existing configuration
});
```

For more configuration options, see the [documentation in the next-axiom GitHub repository](https://github.com/axiomhq/next-axiom).

## Send logs from Vercel preview deployments

To send logs from Vercel preview deployments to Axiom, enable preview deployments for the environment variable `NEXT_PUBLIC_AXIOM_INGEST_ENDPOINT`. For more information, see the [Vercel documentation](https://vercel.com/docs/projects/environment-variables/managing-environment-variables).

# Intelligence

Source: https://axiom.co/docs/console/intelligence

Learn about Axiom's intelligence features that accelerate insights and automate data analysis.

Axiom’s Console is evolving from a powerful space for human-led investigation into one that also offers assistance to help you reach insights faster. By combining Axiom's cost-efficient data platform with intelligence features, Axiom is transforming common workflows from reactive chores into proactive, machine-assisted actions.

This section covers the intelligence features built into the Axiom Console.

## Spotlight

Spotlight is an interactive analysis feature that helps you find the root cause faster. Rather than reviewing data from a notable period event by event, Spotlight automatically compares a subset of events against the baseline. Spotlight analyzes every field to surface the most significant differences, turning manual investigation into a fast, targeted discovery process.

Learn more about this feature on the [Spotlight](/console/intelligence/spotlight) page.

## AI-assisted workflows

Axiom also includes several features that use AI to accelerate common workflows.
### Natural language querying

Ask questions of your data in plain English and have Axiom translate your request directly into a valid Axiom Processing Language (APL) query. This lowers the barrier to exploring your data and makes it easier for everyone on your team to find the answers they need.

Learn more about [generating queries using AI](/query-data/explore#generate-query-using-ai).

### AI-powered dashboard generation

Instead of building dashboards from scratch, you can describe your requirements in natural language and have Axiom generate a complete dashboard for you instantly. Axiom analyzes your events and goals to select the most appropriate visualizations.

Learn more about [generating dashboards using AI](/dashboards/create#generate-dashboards-using-ai).

# Spotlight

Source: https://axiom.co/docs/console/intelligence/spotlight

This page explains how to use Spotlight to automatically identify differences between selected events and baseline data.

Spotlight allows you to highlight a region of event data and automatically identify how it deviates from the baseline across different fields. Instead of manually crafting queries to investigate anomalies, Spotlight analyzes every field in your data and presents the most significant differences through intelligent visualizations.

Spotlight is particularly useful for:

* **Root cause analysis**: Quickly identify why certain traces are slower, errors are occurring, or performance is degraded.
* **Anomaly investigation**: Understand what makes problematic events different from normal baseline behavior.
* **Pattern discovery**: Spot trends and correlations in your data that might not be immediately obvious.

## How Spotlight works

Spotlight compares two sets of events:

* **Comparison set**: The events you select by highlighting a region on a chart.
* **Baseline set**: All other events that contributed to the chart.
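Conceptually, the two sets partition the events behind the chart. The following TypeScript sketch illustrates that split. It assumes that a selection is a half-open time range, optionally narrowed to an inclusive numeric range on one field, as when you drag over a region of a heatmap. The `LogEvent` and `ChartSelection` types and the `splitBySelection` helper are hypothetical, and the sketch doesn't describe how Axiom implements Spotlight internally.

```typescript
// Hypothetical event shape: an epoch-millisecond timestamp plus arbitrary fields.
type LogEvent = { _time: number } & Record<string, unknown>;

// Hypothetical selection: a time range, optionally narrowed to a value range on one field.
interface ChartSelection {
  from: number; // inclusive, epoch ms
  to: number; // exclusive, epoch ms
  valueRange?: { field: string; min: number; max: number }; // inclusive bounds
}

// True if the event falls inside the highlighted region.
function inSelection(event: LogEvent, sel: ChartSelection): boolean {
  if (event._time < sel.from || event._time >= sel.to) return false;
  if (sel.valueRange) {
    const v = event[sel.valueRange.field];
    if (typeof v !== "number") return false;
    return v >= sel.valueRange.min && v <= sel.valueRange.max;
  }
  return true;
}

// Splits the chart's events into the comparison set (inside the selection)
// and the baseline set (every other event that contributed to the chart).
function splitBySelection(events: LogEvent[], sel: ChartSelection) {
  const comparison: LogEvent[] = [];
  const baseline: LogEvent[] = [];
  for (const e of events) (inSelection(e, sel) ? comparison : baseline).push(e);
  return { comparison, baseline };
}

// Usage: select slow events (duration 500-1000) between t=500 and t=2000.
const demo = splitBySelection(
  [
    { _time: 0, duration: 10 },
    { _time: 1000, duration: 900 },
    { _time: 1000, duration: 20 },
    { _time: 5000, duration: 950 },
  ],
  { from: 500, to: 2000, valueRange: { field: "duration", min: 500, max: 1000 } },
);
console.log(demo.comparison.length, demo.baseline.length); // 1 3
```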
For each field present in your data, Spotlight calculates the differences between these two sets and ranks them by significance. The most interesting differences are displayed first using visualizations that adapt to your data types.

## Use Spotlight

### Start Spotlight analysis

1. In the **Query** tab, create a query that produces a heatmap or time series chart.
2. On the chart, click and drag to select the region you want to investigate.
3. In the selection tooltip, click **Run Spotlight**.

Spotlight analyzes the selected events and displays the results in a new panel showing the most significant differences across all fields.

Alternatively, start Spotlight from a table view by right-clicking the value you want to select and choosing **Run spotlight**.

### Interpret results

Spotlight displays results using two types of visualization, depending on your data:

* **Bar charts** for categorical fields (strings, booleans)
  * Compares the proportion of events that have a given value for selected and baseline events.
  * Useful for understanding differences in status codes, service names, or boolean flags.
* **Boxplots** for numeric fields (integers, floats, timespans) with many distinct values

  * Shows the range of values in both comparison and baseline sets.
  * Identifies the minimum, P25, P75, and maximum values.
  * Useful for understanding differences in response times or other numeric quantities.

For each visualization, Axiom displays the proportion of selected and baseline events (where the field is present).
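This page doesn't document Spotlight's exact ranking, so the following TypeScript sketch only illustrates the two comparisons described above. For a categorical field, it computes the proportion of each value among events where the field is present, separately for the comparison and baseline sets. For a numeric field, it computes the minimum, P25, P75, and maximum. Several parts of the sketch are assumptions: scoring a categorical field by the largest absolute difference in proportions, the linear-interpolation percentile definition, and all function names.

```typescript
type Row = Record<string, unknown>;

// Proportion of each value among rows where `field` is present (not undefined or null).
function valueProportions(rows: Row[], field: string): Map<string, number> {
  const counts = new Map<string, number>();
  let present = 0;
  for (const r of rows) {
    const v = r[field];
    if (v === undefined || v === null) continue;
    present++;
    const key = String(v);
    counts.set(key, (counts.get(key) ?? 0) + 1);
  }
  const props = new Map<string, number>();
  for (const [k, c] of counts) props.set(k, c / present);
  return props;
}

// Assumed score: the largest absolute difference in any value's proportion between sets.
function categoricalScore(comparison: Row[], baseline: Row[], field: string): number {
  const a = valueProportions(comparison, field);
  const b = valueProportions(baseline, field);
  let best = 0;
  for (const k of new Set([...a.keys(), ...b.keys()])) {
    best = Math.max(best, Math.abs((a.get(k) ?? 0) - (b.get(k) ?? 0)));
  }
  return best;
}

// Ranks categorical fields by the assumed score, most different first.
function rankCategoricalFields(comparison: Row[], baseline: Row[], fields: string[]) {
  return fields
    .map((field) => ({ field, score: categoricalScore(comparison, baseline, field) }))
    .sort((x, y) => y.score - x.score);
}

// Linear-interpolation percentile on an ascending array (one of several common definitions).
function percentile(sorted: number[], p: number): number {
  const idx = (sorted.length - 1) * p;
  const lo = Math.floor(idx);
  const hi = Math.ceil(idx);
  return sorted[lo] + (sorted[hi] - sorted[lo]) * (idx - lo);
}

// Boxplot summary (min, P25, P75, max) of a numeric field; null if no numeric values.
function boxplotSummary(rows: Row[], field: string) {
  const values = rows
    .map((r) => r[field])
    .filter((v): v is number => typeof v === "number")
    .sort((x, y) => x - y);
  if (values.length === 0) return null;
  return {
    min: values[0],
    p25: percentile(values, 0.25),
    p75: percentile(values, 0.75),
    max: values[values.length - 1],
  };
}
```

In practice, you would call `boxplotSummary` once for the comparison set and once for the baseline set, then plot the two summaries side by side.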

### Dig deeper

To dig deeper, iteratively refine your Spotlight analysis or jump to a view of matching events.

1. **Filter and re-run**: Right-click specific values in the results and select **Re-run spotlight** to filter your data and run Spotlight again with a more focused scope.

2. **Show events**: Right-click specific values in the results and select **Show events** to filter your data and see matching events.

## Spotlight limitations

* **Custom attributes**: Currently, custom attributes in OTel spans aren’t included in the Spotlight results. Axiom will soon support custom attributes in Spotlight.

* **Complex queries**: Spotlight works well for queries with at most one aggregation step. Complex queries with multiple aggregations aren’t supported.

## Example workflows

### Investigate slow traces

1. Create a heatmap query:

```kusto
['traces']
| summarize histogram(duration, 20) by bin_auto(_time)
```

2. Select the region showing the slowest traces.

3. Run Spotlight to see if slow traces are associated with specific endpoints, regions, or user segments.

### Understand error spikes

1. Create a line chart:

```kusto
['logs']
| where level == "error"
| summarize count() by bin_auto(_time)
```

2. Select the time period where errors spiked.

3. Run Spotlight to identify if there's anything different about the selected errors.

# Configure dashboard elements

Source: https://axiom.co/docs/dashboard-elements/configure

This section explains how to configure dashboard elements.

When you create a chart, click
